How ChatGPT Can Help Venture Capitalists Make Better Investment Decisions

I recently attended a talk by MIT professor David Rand, a leading researcher on misinformation and the psychology of belief. Rand’s findings challenged my assumptions about confirmation bias, a concept I’ve called “VC’s poison pill” because it is often the root cause of bad investment decisions. Confirmation bias is a systemic issue that can cloud judgment at every stage of the VC process, from deal flow analysis to exploratory due diligence to investment committee reviews to portfolio monitoring.

Rand’s research raised an intriguing question: What if confirmation bias isn’t as unbreakable as we think? Although it focused on conspiracy theories, his work suggests that carefully structured, AI-driven interactions can shift even deeply entrenched beliefs. This breakthrough made me wonder: could AI-based tools help VCs see past their initial judgments and make better investment decisions?

In this article, I’ll share insights from Rand’s research and test its implications on a real-life example—a firmly held belief, almost a conspiracy theory, from a VC. We’ll explore whether tools like ChatGPT could be a solution for Venture Capitalists looking to challenge their own biases and make better investment decisions in a field notoriously shaped by intuition and early judgments.


Confirmation Bias in VC: A Barrier To Sound Investment Decisions

Confirmation bias, the tendency to favor information that confirms pre-existing beliefs and ignore contrary data, is a known challenge in Venture Capital. I’ve explored it in several articles on this website, showing that it can subtly influence VCs at every stage of the investment process.

During the due diligence phase, confirmation bias can undermine the effort to uncover problems with a startup’s claims. In the case of Theranos, many Investors were swayed by the high-profile endorsements the company had received and overlooked critical red flags in both its technology and its financials. Rather than conducting thorough technology audits or consulting former employees, some Investors engaged in “proxy due diligence,” assuming that backing from Walgreens and influential Board members validated Theranos’s product. This reliance on external signals allowed Elizabeth Holmes’s claims to go unchallenged and let a distorted view of the company’s capabilities persist. The case shows how confirmation bias in due diligence can obscure objective assessment, and why skepticism and rigorous data verification matter at every stage.

In Investment Committee meetings, confirmation bias may appear when VCs become attached to Founders. The back-and-forth with entrepreneurs builds rapport, which is essential for a long-term partnership but also risks clouding objective judgment. In my article on best practices in ICs, I discuss how experienced VC firms use data-driven analysis and structured IC memos to force a balanced view and prompt the committee to consider potential downsides.

Reinvestment decisions, particularly bridge financing, are another area where confirmation bias plays a critical role. When a startup underperforms, VCs face a tough choice: cut their losses or reinvest. As I noted in my article on bridge financing, confirmation bias can push VCs to throw more money at struggling ventures in the hope of turning them around, making it difficult to accept clear signs that the startup may not recover. Structured, data-focused decision-making tools help by framing each choice around measurable risks and mitigants.

Confirmation bias can lead not only to bad investment decisions (errors of commission) but also to significant misses (errors of omission). When VCs let bias shape their decision process, they risk passing on more promising opportunities.

This is precisely where AI tools like ChatGPT could provide an advantage, checking our natural tendency toward bias. By bringing in objective insights and challenging assumptions, these tools may help VCs make better, more balanced investment decisions.

MIT’s David Rand co-authored a study that may show us how to do it.

Is Confirmation Bias Overrated? MIT Thinks So

Recent research from MIT Professor David Rand and his colleagues offers a surprising take on confirmation bias, suggesting that it may be more flexible than traditionally thought, and perhaps overrated.

Rand and his co-authors’ study, centered on AI’s role in countering entrenched beliefs, focused on conspiracy theories. Their findings revealed that tailored, data-driven dialogues could reduce even deeply held beliefs by around 20% on average. The work challenges the view that confirmation bias is immovable, suggesting instead that people presented with clear, personalized evidence may be more open to changing their views than expected.

In the study, participants first described a conspiracy theory they believed and the evidence they felt supported it. Then an AI chatbot (powered, at the time, by GPT-4 Turbo) engaged them in a structured conversation, presenting counter-evidence and fact-based rebuttals that specifically addressed each participant’s points.

This approach was designed not to persuade directly but to present objective facts, allowing participants to reconsider on their own terms. By reframing the interaction in a neutral, non-confrontational way, the AI reduced defensive reactions—a critical factor in breaking through confirmation bias.

Find more of David Rand’s research on MIT’s website.

To be clear, Rand and colleagues don’t mention confirmation bias in their work. A legitimate question is: What is the relationship between conspiracy theories and confirmation bias?

Research has shown that conspiracy theories thrive on several cognitive biases, which act as psychological shortcuts that simplify complex issues. These include proportionality bias, the tendency to believe that significant events must have equally significant causes, and intentionality bias, the tendency to assume that events were deliberately planned by someone. Together, these biases create a mindset highly resistant to disconfirming evidence.

Confirmation bias is central to the development and endurance of conspiracy theories. It drives individuals to seek, interpret, and remember information that aligns with their pre-existing beliefs while dismissing contradictory evidence. For conspiracy believers, this selective processing means prioritizing content that confirms their views, which reinforces those views further. When confronted with opposing evidence, they may even double down on their original opinions, a phenomenon known as the “backfire effect.”

Maybe confirmation bias is overrated.

David Rand – Massachusetts Institute of Technology

At the conference, I asked David Rand how he explained his team’s results since, in my experience, people don’t change their minds easily. He replied that their findings suggest that, under the right conditions, confirmation bias may not be as rigid as it seems.

It was an eye-opening moment for me. Having written quite a few articles on confirmation bias in VC, I could easily have felt invested in the concept. Rather than falling into confirmation bias myself and shrugging off these findings, I started analyzing Rand’s methodology to find a way to integrate it into my VC training.

Tools like ChatGPT, when prompted and used correctly, may serve as a valuable “second opinion” in due diligence, allowing Investors to step back and re-evaluate evidence with fresh eyes.

This approach could give VCs a powerful way to challenge their assumptions.

Since I aim here to develop a methodology to help VCs question their biases, the first step is to try DebunkBot, the ChatGPT tool developed by Costello, Pennycook, and Rand in their experiment.

How DebunkBot Works

The DebunkBot process is structured into three main phases to evaluate and potentially reduce belief in conspiracy theories:

- Initial Belief Rating: Participants are asked to rate their confidence in a particular conspiracy theory on a scale from 0 to 100%. This step captures their initial stance on the plausibility of the theory.

- AI Interaction: The AI engages the participant in a three-round back-and-forth dialogue, addressing their concerns with factual counterpoints and promoting critical thinking. Participants can provide input or ask questions, which the AI then responds to with clarifying information and reasoned analysis.

- Post-Interaction Belief Reassessment: After the conversation, participants rate their belief in the conspiracy theory again. This second rating measures any shifts in their conviction due to the interaction. Feedback is then provided, including insights on the theory’s plausibility, the degree of belief change, and a comparison with other participants’ responses.
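The three phases above amount to a simple protocol: a rating on a 0 to 100 scale, a fixed number of exchanges, then a second rating. Here is a minimal Python sketch of that protocol under stated assumptions: `Session`, `counter_arguer`, and `share_with_smaller_reduction` are hypothetical names, not the researchers’ code; no language model is called (a stub stands in for the AI); and the ratings in the demo are made-up numbers, not study data. It reports the shift both in points and as a percentage of the initial rating, and uses one assumed way of comparing a participant with others.

```python
"""Hypothetical sketch of a DebunkBot-style session: rate, converse, re-rate.

No real LLM is called; `counter_arguer` stands in for whatever model produces
the evidence-based replies (an assumption, not the study's implementation).
"""
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# A counter-arguer takes (claim, participant_message, history) and returns a reply.
CounterArguer = Callable[[str, str, List[Tuple[str, str]]], str]


def check_rating(rating: float) -> float:
    """Belief ratings use a 0-100 scale, as in the described protocol."""
    if not 0 <= rating <= 100:
        raise ValueError(f"rating must be in [0, 100], got {rating}")
    return float(rating)


@dataclass
class Session:
    claim: str
    pre_rating: float
    history: List[Tuple[str, str]] = field(default_factory=list)
    post_rating: Optional[float] = None

    def __post_init__(self) -> None:
        self.pre_rating = check_rating(self.pre_rating)

    def converse(self, messages: Sequence[str], counter_arguer: CounterArguer,
                 rounds: int = 3) -> None:
        """Run at most `rounds` participant/AI exchanges (three in the study)."""
        for message in list(messages)[:rounds]:
            reply = counter_arguer(self.claim, message, self.history)
            self.history.append((message, reply))

    def reassess(self, rating: float) -> None:
        """Record the post-conversation belief rating."""
        self.post_rating = check_rating(rating)

    def reduction(self) -> Tuple[float, float]:
        """Drop in belief: (points on the 0-100 scale, percent of the initial rating)."""
        if self.post_rating is None:
            raise RuntimeError("call reassess() first")
        points = self.pre_rating - self.post_rating
        percent = 100 * points / self.pre_rating if self.pre_rating else 0.0
        return points, percent


def share_with_smaller_reduction(points: float, others: Sequence[float]) -> float:
    """Fraction of other participants whose belief fell by fewer points (assumed metric)."""
    if not others:
        return 0.0
    return sum(1 for other in others if other < points) / len(others)


if __name__ == "__main__":
    def stub(claim: str, message: str, history: List[Tuple[str, str]]) -> str:
        return f"Round {len(history) + 1}: what evidence would distinguish this view?"

    session = Session("Example claim", pre_rating=80)
    session.converse(["first", "second", "third", "fourth"], stub)
    session.reassess(64)
    print(len(session.history))  # 3: extra messages beyond three rounds are ignored
    print(session.reduction())   # (16.0, 20.0)
    print(share_with_smaller_reduction(16.0, [5, 10, 20, 30]))  # 0.5
```

Capping the loop at `rounds` mirrors the fixed three-exchange design; a real deployment would replace the stub with a model call and would need its own logging and consent handling.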

Let’s illustrate with a real interaction between one of the original study’s participants and DebunkBot.

During the conference at MIT, Rand shared an example of a structured conversation between DebunkBot and a participant about a 9/11 conspiracy theory. The participant believed that the 9/11 attacks were orchestrated with the aid of controlled demolitions, a common conspiracy theory. In its response, the bot addressed specific points with evidence-based information, citing findings from institutions such as the National Institute of Standards and Technology (NIST) that the collapses resulted from structural failures caused by the impacts and fires, not explosives.

The conversation illustrates a key part of Rand’s approach: the AI doesn’t confront or directly dismiss the participant’s belief. Instead, it calmly presents factual information, like the melting point of steel and the physics of structural collapse, in a way that encourages the participant to re-evaluate their stance. This non-adversarial, fact-based method allows the AI to engage constructively, lowering defensiveness and creating an opening for reflection.

Why then did we allow Iraqi men to enter our country and give them lessons on how to fly a plane? They also got past security fairly easily without question.

Participant in the DebunkBot experiment

The participant’s follow-up question, which reveals underlying suspicions about foreign influence in U.S. security, highlights the persistence of such beliefs even after receiving factual counterpoints—demonstrating both the challenge and promise of AI in shifting entrenched views.

Testing DebunkBot With A VC-Generated Conspiracy Theory

Most people think of VCs as highly analytical, evidence-driven professionals, not “lunatics” harboring crazy conspiracy theories. Well, it just so happens that on the very same day I attended MIT’s conference, a startup Investor presented one such theory to me. (Let me clarify that conspiracy theories can turn out to be true; I’m not judging the foundation of that VC’s beliefs.)

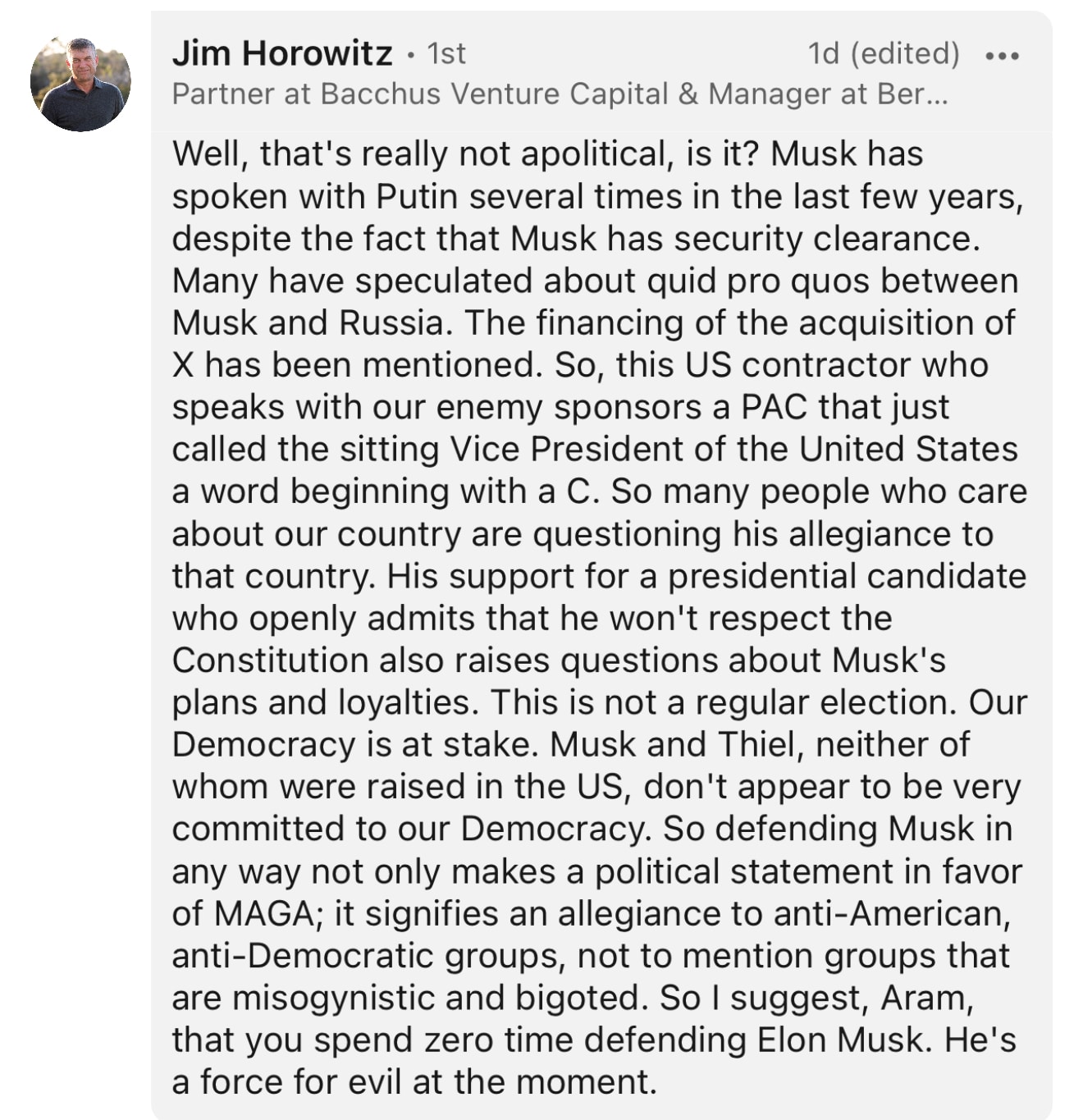

I posted a seemingly straightforward LinkedIn analysis discussing Elon Musk’s political involvement, keeping it—I thought—strictly non-partisan. But it didn’t take long for a VC to jump into the conversation with an intense, layered conspiracy theory about Musk’s alleged affiliations.

It got me thinking: can the techniques used to counter conspiracy theories with AI also challenge the biases that sometimes cloud even seasoned Investors’ judgments?

I decided to test DebunkBot with the conspiracy theory the VC shared on my LinkedIn post, putting myself in his shoes to imagine the rebuttals he might make. Here’s a concise description of my interaction with DebunkBot, capturing the flow of our dialogue and illustrating how the bot operates.

After I opened the experiment with a loaded statement about Elon Musk’s alleged influence over American politics, citing speculation about his connections with Russia and his support for controversial figures, DebunkBot responded by unpacking each claim. It began by acknowledging my concerns, distinguishing between speculation and evidence, and highlighting the need for concrete proof when discussing potentially harmful affiliations.

I suggested that Musk’s support for some political campaigns would harm democracy. DebunkBot’s response emphasized the democratic right of individuals, including public figures, to express political preferences. It cautioned against equating personal endorsements with an existential threat to democracy, advocating instead for a focus on verified actions rather than assumptions about intent.

In my following prompt, I doubled down, stating that Musk’s wealth and ownership of Twitter presented a unique risk to democratic processes. DebunkBot’s response addressed the broader debate around wealthy individuals in politics and the complexities of social media influence, encouraging a balanced view. It reminded me that social media platforms serve diverse perspectives and are subject to scrutiny and analysis, not solely shaped by individual agendas.

Throughout, DebunkBot remained steady in its approach, focusing on critical thinking, democratic principles, and the importance of evidence. It provided calm, fact-centered rebuttals without dismissing my points, aiming to guide me toward a more evidence-based perspective. This structured interaction highlighted how AI could potentially de-escalate conspiratorial thinking by gently steering the conversation back to facts and broader civic principles.

By the end, DebunkBot encouraged a more constructive focus on strengthening democratic institutions rather than assigning undue weight to any one individual’s actions or potential agendas. Through this experiment, I observed firsthand how AI like DebunkBot can be used to address confirmation bias in an organized, respectful manner.

Interestingly, DebunkBot took the same approach I had taken in my original X thread on Musk’s involvement, where I provided facts and videos to substantiate my claim that he was applying a tested playbook with modern means.

I must admit, though, that DebunkBot kept a more neutral tone than I did. Had I managed the same, I might have avoided triggering others.

Can A “VCBot” Help VCs Make Better Investment Decisions?

The potential to apply a tool like DebunkBot to Venture Capital investing is intriguing, particularly given the well-documented impact of confirmation bias in investment decisions.

Critical stages of the VC process—such as initial deal flow evaluations, exploratory due diligence, and Investment Committee (IC) reviews—are highly susceptible to bias. At each point, the instinct to confirm initial impressions can narrow the scope of analysis, influencing the data VCs seek, interpret, and act on.

This aligns with a foundational principle I believe all Investors making investment decisions or recommendations must apply: delay intuition to collect more data. As I discussed in my article on intuition versus data in Venture Capital, Investors often over-rely on their intuition, unaware that “expert intuition” doesn’t work well in fields such as VC.

When VCs allow early impressions to dominate, they risk falling into the trap of confirmation bias, selectively gathering information that supports these initial beliefs rather than challenging them. In these cases, even rigorous DD can be skewed, as the data collection itself becomes a biased search for validation.

By guiding VCs to consider contrary data and actively question their interpretations, an AI-based tool like DebunkBot could help keep the data collection process objective and aligned with the VC firm’s criteria for success.

One promising approach might involve testing a tailored “VCBot” in the field. Unlike a general debiasing tool, this AI would be trained specifically on cognitive biases relevant to VC decision-making, along with insights on the factors that align with successful investment outcomes. VCBot could be calibrated to the firm’s investment thesis and operational culture.

Embedding VCBot into the workflow could prompt VCs to engage in a structured dialogue that checks their initial assumptions, challenges their interpretations, and encourages a broader, data-driven perspective at crucial decision points.

Rather than a quick three-round exchange, VCBot could run a longer, adaptive questioning session that unfolds based on the specifics of each deal. Rand and colleagues showed that personalization is crucial to their method’s effectiveness.
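To make the VCBot idea more concrete, here is a minimal Python sketch under loudly stated assumptions: the evidence categories, question templates, and functions (`ThesisClaim`, `next_questions`, `run_session`) are hypothetical and are not part of DebunkBot or any existing product. The sketch turns a deal’s thesis into challenge questions, starts with claims that rest on proxy signals such as endorsements (the Theranos pattern described earlier), and reopens any claim that receives an evasive answer, so the session length adapts to the deal instead of stopping after three rounds. In practice, a language model would phrase the questions and judge the answers; here both are plain callables.

```python
"""Hypothetical VCBot sketch: turn a deal thesis into adaptive challenge rounds.

Every name, evidence category, and question template below is an illustrative
assumption based on the biases discussed in this article; no real tool is implied.
"""
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Challenge templates keyed by the kind of evidence behind a claim,
# ordered from weakest (proxy signals) to strongest (data). Assumed taxonomy.
CHALLENGES: Dict[str, str] = {
    "endorsement": "Beyond who backs the company, what direct evidence verifies: {claim}?",
    "founder_rapport": "Which reference check or data point could contradict: {claim}?",
    "prior_investment": "If this were a new deal today, would you still fund it given: {claim}?",
    "data": "What result would make you abandon: {claim}?",
}


@dataclass
class ThesisClaim:
    text: str
    evidence: str  # must be one of the CHALLENGES keys
    challenged: bool = False

    def __post_init__(self) -> None:
        if self.evidence not in CHALLENGES:
            raise ValueError(f"unknown evidence type: {self.evidence}")


def next_questions(claims: List[ThesisClaim], per_round: int) -> List[Tuple[ThesisClaim, str]]:
    """Challenge up to `per_round` open claims, weakest evidence first."""
    order = list(CHALLENGES)  # dicts keep insertion order, so this is weakest-first
    pending = sorted((c for c in claims if not c.challenged),
                     key=lambda c: order.index(c.evidence))
    batch = []
    for claim in pending[:per_round]:
        claim.challenged = True
        batch.append((claim, CHALLENGES[claim.evidence].format(claim=claim.text)))
    return batch


def run_session(claims: List[ThesisClaim],
                answer: Callable[[str], str],
                is_substantive: Callable[[str], bool],
                per_round: int = 2,
                max_rounds: int = 10) -> List[List[str]]:
    """Question the investor until every claim gets a substantive answer.

    Session length adapts to the deal: more claims, or evasive answers,
    mean more rounds (capped by `max_rounds`).
    """
    rounds: List[List[str]] = []
    while len(rounds) < max_rounds:
        batch = next_questions(claims, per_round)
        if not batch:
            break
        for claim, question in batch:
            if not is_substantive(answer(question)):
                claim.challenged = False  # reopen: ask again in a later round
        rounds.append([question for _, question in batch])
    return rounds


if __name__ == "__main__":
    deal = [
        ThesisClaim("retention proves product-market fit", "data"),
        ThesisClaim("a big-name partner validates the technology", "endorsement"),
        ThesisClaim("the founder is exceptional", "founder_rapport"),
    ]
    # Stand-ins for the investor's replies and for judging each reply.
    session = run_session(deal, answer=lambda q: "We ran independent reference calls.",
                          is_substantive=lambda a: len(a) > 10)
    for number, questions in enumerate(session, start=1):
        print(number, questions)
```

Ordering by evidence type is one possible design choice: it forces the claims most exposed to “proxy due diligence” to be questioned first, while `max_rounds` keeps an evasive session from running forever.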

Testing VCBot In The Field

To explore this further, I plan to conduct a study with MBA students who have prior VC experience and are training to enter the field. Through simulations, we’ll investigate how well a tool like VCBot can support these emerging Investors in recognizing and mitigating confirmation bias.

By gathering insights from these simulations, I hope to understand both the potential and the limitations of debiasing AI tools in real-world VC contexts. This study could lay the groundwork for implementing a VCBot in a live environment, allowing us to assess its value practically and measure a tangible impact on investment outcomes.

In an industry where high-stakes decisions are often driven by fast-moving market signals and inherent biases, integrating a structured debiasing method like VCBot could mark a significant step forward. If successful, it wouldn’t just help VCs make better individual investments; it could also create a culture of deeper critical thinking and objectivity within firms, ultimately contributing to better returns.

Contact me on LinkedIn if you’re interested in this approach! Stay tuned for more on this fascinating topic—subscribe to the newsletter below for updates.

Conclusion: tl;dr

The growing field of AI-driven debunking tools, originally designed to counter conspiracy theories and misinformation, holds promising potential for Venture Capital decision-making.

By addressing confirmation bias—a pervasive issue at critical stages of the VC process like initial evaluation, due diligence, and Investment Committee reviews—these tools could help VCs approach investment opportunities with greater objectivity.

Just as the MIT-built DebunkBot nudges users to question their assumptions and broaden their perspectives, a tailored “VCBot” could guide Investors to challenge initial impressions, consider contradictory data, and delay intuition-based judgments in favor of a more data-driven analysis.

Further research and testing—such as my study planned with MBA students—will help determine how effectively these AI-based methods can be applied. Integrating debiasing AI tools into the VC process may enhance decision quality, resulting in better performance outcomes.